A local CI/CD pipeline offers several benefits for software development teams. It can improve the security of your code by keeping sensitive information on the company's own machines, which reduces the risk of security breaches and other issues that can arise when code is stored on a remote server. Setting up a local CI/CD pipeline can also be more cost-effective than using a hosted one, especially for small projects: by using open-source tools and running the pipeline on a local server or virtual machine, you can save on hosting and infrastructure costs.

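Under a change to Docker Hub's account policy announced in March 2023, organizations on the Free Team plan that did not upgrade to a paid plan before April 14, 2023 risked having their images deleted after 30 days. Docker withdrew this plan later that month, but it shows how much a pipeline can depend on a third-party registry's policies.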

In this article, we go through the following steps to set up a local CI/CD pipeline for a small to medium-sized team:

- Set up Kubernetes

- Set up a local Docker registry on Kubernetes

- Set up Gitblit on Kubernetes

- Set up Jenkins on Kubernetes

- Configure a Spring Boot backend pipeline in Jenkins

- Configure a Flask backend pipeline in Jenkins

- Configure a React frontend pipeline in Jenkins

- Write code in the frontend and backend projects

- Run the frontend and backend pipelines

We are going to set up all the CI/CD components, including the source code repository (Gitblit), the automation server (Jenkins) and the Docker image registry, on top of Kubernetes. This figure shows the final architecture of the pipeline:

Set Up Kubernetes

Kubernetes orchestrates containers that run on a container runtime such as Docker, taking care of tasks including creating containers, deploying them and managing their configuration. There are several methods and tools to set up a Kubernetes cluster, such as Docker Desktop, minikube, kind, k3s and kubeadm. In this article, for the sake of simplicity, I set up K8s using Docker Desktop.

If you haven't already installed Docker Desktop, download and install the latest version compatible with your OS from the official Docker website. Docker was originally built for Linux, but thanks to the Hyper-V and WSL 2 features you can also run it on Windows, so you need to enable or install one of them before installing Docker.

In the first installation step, the installer asks whether to use WSL 2 instead of Hyper-V. Check the box if you have WSL 2 installed and click OK. WSL 2 uses a subset of Hyper-V's virtualization architecture, but it doesn't require the Hyper-V feature itself to be enabled.

Docker Desktop comes with a graphical user interface that lets you install and configure Kubernetes. Open the Docker Desktop application and go to Settings. Under the Kubernetes tab, check the Enable Kubernetes checkbox and click Apply & Restart.

After Docker Desktop restarts, use the following command to verify that the Kubernetes cluster is running:

kubectl cluster-info

If the installation completed successfully, the command displays information about your Kubernetes cluster, such as the address of the control plane.

Next, we install the Kubernetes Dashboard, a graphical interface for working with K8s that gives an overview of the applications running on the cluster. It requires a manual setup.

First, execute the command below:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.6.0/aio/deploy/recommended.yaml

Next, start a proxy server between your machine and the Kubernetes API server, which lets you reach the API server (and the Dashboard) from localhost:

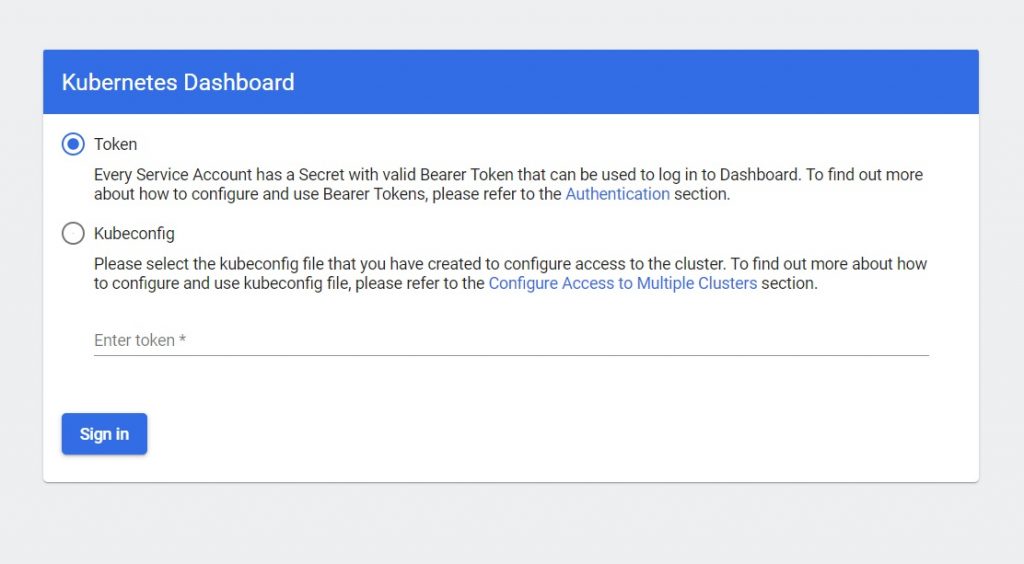

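kubectl proxy

The Dashboard can then be accessed at http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/. However, the login page asks for a token. The token is issued for a ServiceAccount, so in a second terminal (kubectl proxy keeps running in the foreground) first create a ServiceAccount named admin-user and bind it to the cluster-admin role. Full cluster-admin rights are acceptable on a local test cluster but too broad for a shared one:

kubectl -n kubernetes-dashboard create serviceaccount admin-user

kubectl create clusterrolebinding admin-user --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:admin-user

Then create a login token for it:

kubectl -n kubernetes-dashboard create token admin-user

Set Up a Local Docker Registry on Kubernetes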

Despite its many advantages, Docker Hub comes with its own set of limitations. For example, anonymous users and users on the free plan are limited to 100 and 200 image pull requests per 6 hours, respectively. In such cases, a private local registry is a better choice: besides having no pull or push rate limits, it gives us control over storage and many other options to suit our needs.
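To see how close you are to these limits, Docker documents a way to query your current quota: request an anonymous pull token for the ratelimitpreview/test repository, then send a HEAD request for its manifest. The response carries ratelimit-limit and ratelimit-remaining headers in a form such as 100;w=21600, where w is the window in seconds (21600 s is the 6 hours mentioned above). The Python sketch below assumes that documented endpoint and header format; parse_ratelimit and fetch_ratelimit are hypothetical helper names, and Docker has revised the actual numbers over time.

```python
import json
import urllib.request

# Endpoints from Docker's rate-limit documentation (assumed unchanged)
TOKEN_URL = (
    "https://auth.docker.io/token"
    "?service=registry.docker.io&scope=repository:ratelimitpreview/test:pull"
)
MANIFEST_URL = "https://registry-1.docker.io/v2/ratelimitpreview/test/manifests/latest"


def parse_ratelimit(value: str) -> tuple[int, int]:
    """Parse a header such as '100;w=21600' into (count, window_seconds)."""
    count, _, params = value.partition(";")
    window = 0
    for param in params.split(";"):
        key, _, val = param.strip().partition("=")
        if key == "w":
            window = int(val)
    return int(count), window


def fetch_ratelimit() -> dict[str, tuple[int, int]]:
    """Query Docker Hub anonymously (needs network access)."""
    with urllib.request.urlopen(TOKEN_URL) as resp:
        token = json.load(resp)["token"]
    req = urllib.request.Request(
        MANIFEST_URL, method="HEAD", headers={"Authorization": f"Bearer {token}"}
    )
    with urllib.request.urlopen(req) as resp:
        # Headers may be missing, e.g. for accounts without a pull limit
        return {
            name: parse_ratelimit(resp.headers[name])
            for name in ("ratelimit-limit", "ratelimit-remaining")
            if resp.headers.get(name)
        }


if __name__ == "__main__":
    print(fetch_ratelimit())
```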

First of all, let's create a namespace for our CI/CD pipeline in Kubernetes with the following command:

kubectl create namespace cicd-pipeline

Next, we use the openssl and htpasswd tools to build a TLS certificate and set up user authentication for our registry.

TLS certificate:

mkdir certs

openssl req -x509 -newkey rsa:4096 -days 365 -nodes -sha256 -keyout certs/tls.key -out certs/tls.crt -subj "/CN=docker-registry" -addext "subjectAltName = DNS:docker-registry"

Adding authentication:

mkdir auth

docker run --rm --entrypoint htpasswd registry:2.6.2 -Bbn admin 1234@5 > auth/htpasswd

Let's break down each part of the above command. The “--rm” flag tells Docker to remove the container automatically once it has finished running. “--entrypoint htpasswd” sets the container's entrypoint to htpasswd, a tool for managing user authentication files. “registry:2.6.2” is the Docker image the container is based on; this older tag is used because it still includes the htpasswd binary, which later registry images no longer ship. “-Bbn admin 1234@5” tells htpasswd to create a hashed password entry for the user admin with the password 1234@5. “-Bbn” combines three flags: B makes htpasswd hash the password with bcrypt (the only format the registry's htpasswd authentication accepts), b takes the password from the command line instead of prompting for it, and n prints the result to standard output instead of updating a file. Finally, “> auth/htpasswd” redirects the output of htpasswd to a file named htpasswd in the auth directory we created in the first line. This file contains the username and the bcrypt hash of the password that were just created.
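Because the registry only accepts bcrypt hashes, a quick sanity check on the generated file can save some debugging later. Each line of the file has the form user:hash, and bcrypt hashes start with a prefix such as $2y$. The Python sketch below assumes the file sits at auth/htpasswd as created above; check_htpasswd is a hypothetical helper, and it only inspects the hash prefix rather than verifying any password.

```python
from pathlib import Path

# Prefixes used by bcrypt hashes ($2y$ is what Apache htpasswd -B writes)
BCRYPT_PREFIXES = ("$2y$", "$2a$", "$2b$")


def check_htpasswd(text: str) -> dict[str, bool]:
    """Map each user in htpasswd content to whether its hash looks like bcrypt."""
    result = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or ":" not in line:
            continue  # skip blank or malformed lines
        user, _, hashed = line.partition(":")
        result[user] = hashed.startswith(BCRYPT_PREFIXES)
    return result


if __name__ == "__main__":
    entries = check_htpasswd(Path("auth/htpasswd").read_text())
    for user, ok in entries.items():
        print(f"{user}: {'bcrypt' if ok else 'NOT bcrypt, the registry will not accept it'}")
```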

Next, we create two secrets, a TLS secret and a generic secret, which we will use to mount our certificate and our password file, respectively.

kubectl create secret tls certs-secret --cert=certs/tls.crt --key=certs/tls.key -n cicd-pipeline

kubectl create secret generic auth-secret --from-file=auth/htpasswd -n cicd-pipeline

The content of the htpasswd file is stored in the generic secret we just created, under the key htpasswd (--from-file uses the file name as the key).
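Kubernetes stores Secret values base64-encoded, keyed by the file's base name when --from-file is used. The Python sketch below reproduces roughly what the secret created above looks like, which can help when comparing it with the output of kubectl get secret auth-secret -n cicd-pipeline -o json. It is an illustration of the data layout, not kubectl's actual implementation; secret_from_file and decode_secret_value are hypothetical names.

```python
import base64
import json
from pathlib import Path


def secret_from_file(name: str, namespace: str, path: str, content: bytes) -> dict:
    """Build a generic (Opaque) Secret manifest the way --from-file lays it out."""
    key = Path(path).name  # --from-file=auth/htpasswd -> key "htpasswd"
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name, "namespace": namespace},
        "data": {key: base64.b64encode(content).decode("ascii")},
    }


def decode_secret_value(secret: dict, key: str) -> bytes:
    """Reverse the encoding, as you would after `kubectl get secret -o json`."""
    return base64.b64decode(secret["data"][key])


if __name__ == "__main__":
    content = Path("auth/htpasswd").read_bytes()
    manifest = secret_from_file("auth-secret", "cicd-pipeline", "auth/htpasswd", content)
    print(json.dumps(manifest, indent=2))
```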

The registry-volume.yaml config file creates the PersistentVolume and PersistentVolumeClaim for our local Docker registry:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: docker-repo-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: /tmp/repository
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: docker-repo-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

accessModes specify the ways in which the persistent volume can be accessed by the associated pod(s). Here, ReadWriteOnce indicates that our persistent volume can be mounted as read-write by a single node in the cluster at a time.
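A claim binds to a volume only if, among other conditions, the volume offers at least the requested capacity and every access mode the claim asks for. Storage class and label selectors also have to match, which this sketch ignores. The simplified Python check below makes those two rules concrete; the helper names and the limited quantity parser (binary suffixes Ki, Mi, Gi and Ti only) are assumptions for illustration.

```python
# Binary suffixes used in Kubernetes quantities (subset, for illustration)
UNITS = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4}


def parse_quantity(q: str) -> int:
    """Convert a quantity such as '1Gi' to bytes (binary suffixes only)."""
    for suffix, factor in UNITS.items():
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * factor
    return int(q)  # plain byte count


def can_bind(pv: dict, pvc: dict) -> bool:
    """Simplified check: enough capacity and all requested access modes offered."""
    capacity = parse_quantity(pv["spec"]["capacity"]["storage"])
    request = parse_quantity(pvc["spec"]["resources"]["requests"]["storage"])
    modes_ok = set(pvc["spec"]["accessModes"]) <= set(pv["spec"]["accessModes"])
    return capacity >= request and modes_ok


pv = {"spec": {"capacity": {"storage": "1Gi"}, "accessModes": ["ReadWriteOnce"]}}
pvc = {"spec": {"resources": {"requests": {"storage": "1Gi"}},
                "accessModes": ["ReadWriteOnce"]}}
print(can_bind(pv, pvc))  # True: the registry PV and PVC above match
```

The same check flags a claim that requests more storage than its volume offers, a mistake that otherwise shows up only as a PVC stuck in the Pending state.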

You can apply it to the cluster through the Kubernetes dashboard or from the command line. Here I'll use the command line:

kubectl create -f registry-volume.yaml -n cicd-pipeline

After creating both the PersistentVolume and the PersistentVolumeClaim, we can create the Docker registry pod that uses this volume.

Apply the registry-pod-service.yaml config file below to your Kubernetes cluster to create the pod and the service in the cicd-pipeline namespace:

apiVersion: v1
kind: Pod
metadata:
  name: docker-registry-pod
  labels:
    app: registry
spec:
  containers:
    - name: registry
      image: registry:2.6.2
      volumeMounts:
        - name: repo-vol
          mountPath: "/var/lib/registry"
        - name: certs-vol
          mountPath: "/certs"
          readOnly: true
        - name: auth-vol
          mountPath: "/auth"
          readOnly: true
      env:
        - name: REGISTRY_AUTH
          value: "htpasswd"
        - name: REGISTRY_AUTH_HTPASSWD_REALM
          value: "Registry Realm"
        - name: REGISTRY_AUTH_HTPASSWD_PATH
          value: "/auth/htpasswd"
        - name: REGISTRY_HTTP_TLS_CERTIFICATE
          value: "/certs/tls.crt"
        - name: REGISTRY_HTTP_TLS_KEY
          value: "/certs/tls.key"
  volumes:
    - name: repo-vol
      persistentVolumeClaim:
        claimName: docker-repo-pvc
    - name: certs-vol
      secret:
        secretName: certs-secret
    - name: auth-vol
      secret:
        secretName: auth-secret
---
kind: Service
apiVersion: v1
metadata:
  name: docker-registry
spec:
  # Expose the service on a static port on each node
  # so that we can access the service from outside the cluster
  type: NodePort
  selector:
    app: registry
  ports:
    # Three types of ports for a service
    # nodePort - a static port assigned on each node
    # port - port exposed internally in the cluster
    # targetPort - the container port to send requests to
    - nodePort: 30001
      port: 5000
      targetPort: 5000

Use the option “-n cicd-pipeline” when applying the YAML config file to ensure the pod and service are created in the cicd-pipeline namespace:

kubectl create -f registry-pod-service.yaml -n cicd-pipeline

To verify that everything is working properly, use the following command to log in to the local registry from outside the cluster, with the credentials created by htpasswd:

docker login 127.0.0.1:30001 -u admin -p 1234@5

If everything is OK, you should get a “Login Succeeded” message.
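Besides docker login, you can talk to the registry's HTTP API directly. The Python sketch below calls the Docker Registry HTTP API V2 endpoint /v2/_catalog with basic authentication and returns the repositories the registry holds. It assumes the NodePort 30001 and the admin credentials created above. Because the self-signed certificate was issued for the name docker-registry rather than 127.0.0.1, the sketch trusts certs/tls.crt but turns off hostname checking, a shortcut that is only reasonable for a local test setup; list_repositories and basic_auth_header are hypothetical names.

```python
import base64
import json
import ssl
import urllib.request

REGISTRY = "https://127.0.0.1:30001"  # NodePort from the Service above


def basic_auth_header(user: str, password: str) -> str:
    """Value for the HTTP Authorization header used by htpasswd auth."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode("ascii")
    return f"Basic {token}"


def list_repositories(user: str, password: str, cafile: str = "certs/tls.crt") -> list:
    """Return the repository names reported by /v2/_catalog."""
    ctx = ssl.create_default_context(cafile=cafile)
    # The certificate names docker-registry, not 127.0.0.1, so skip the
    # hostname check (the certificate itself is still verified).
    ctx.check_hostname = False
    req = urllib.request.Request(
        f"{REGISTRY}/v2/_catalog",
        headers={"Authorization": basic_auth_header(user, password)},
    )
    with urllib.request.urlopen(req, context=ctx) as resp:
        return json.load(resp)["repositories"]


if __name__ == "__main__":
    print(list_repositories("admin", "1234@5"))
```

On a fresh registry the list is empty; it fills up once the pipeline starts pushing images.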

Set Up Gitblit on Kubernetes

Gitblit is an open-source Git server designed to be easy to set up, configure and use. It provides a web GUI for managing repositories and serves them over HTTP(S), SSH and the git protocol, so users can perform the usual Git operations such as cloning, branching, merging and pushing. It's lightweight and easy to deploy, which makes it a popular choice for small teams. Setting up Gitblit in Kubernetes involves three steps:

- Create a PV and PVC for repository storage

- Create a Kubernetes Pod for Gitblit and attach the volume

- Create a Service for the Pod

Create the PV and PVC using the config below (gitblit-volume.yaml):

apiVersion: v1
kind: PersistentVolume
metadata:
  name: gitblit-repo-pv
spec:
  capacity:
    storage: 2Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: /tmp/gitblitrepository
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gitblit-repo-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi

Gitblit Kubernetes Pod (gitblit-pod.yaml):

apiVersion: v1
kind: Pod
metadata:
  name: gitblit-pod
  labels:
    app: gitblit
spec:
  containers:
    - name: gitblit
      image: gitblit/gitblit:latest
      ports:
        - containerPort: 8443
        - containerPort: 8080
        - containerPort: 9418
        - containerPort: 29418
      volumeMounts:
        - name: gitblit-data
          mountPath: /opt/gitblit-data
  volumes:
    - name: gitblit-data
      persistentVolumeClaim:
        claimName: gitblit-repo-pvc

Gitblit Kubernetes Service (gitblit-service.yaml):

apiVersion: v1
kind: Service
metadata:
  name: gitblit-service
spec:
  selector:
    app: gitblit
  ports:
    - name: https
      protocol: TCP
      port: 8443
      targetPort: 8443
      nodePort: 30443
    - name: http
      protocol: TCP
      port: 8080
      targetPort: 8080
      nodePort: 30080
    - name: git
      protocol: TCP
      port: 9418
      targetPort: 9418
      nodePort: 30418
    - name: ssh
      protocol: TCP
      port: 29418
      targetPort: 29418
      nodePort: 30419
  type: NodePort

You can apply all of the above configs either through the Kubernetes dashboard or from the command line, saving them as YAML files like this:

kubectl create -f gitblit-volume.yaml -n cicd-pipeline

kubectl create -f gitblit-pod.yaml -n cicd-pipeline

kubectl create -f gitblit-service.yaml -n cicd-pipeline

If everything is OK, opening https://your-node-ip:30443 after creating the service should show the Gitblit home page (on Docker Desktop, the node IP is localhost). Because the certificate is self-signed, your browser will first show an insecure connection warning.
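Once a repository exists in Gitblit, it can be cloned through any of the exposed ports. The Python sketch below builds clone URLs for the NodePorts defined in gitblit-service.yaml. It assumes Gitblit's usual URL layout (repositories served under /r/ over HTTP(S), and directly by name over the git daemon and SSH); the repository name myproject.git and the user admin are hypothetical placeholders.

```python
from urllib.parse import quote

# NodePorts from gitblit-service.yaml
NODE_PORTS = {"https": 30443, "http": 30080, "git": 30418, "ssh": 30419}


def clone_urls(host: str, repo: str, user: str = "admin") -> dict[str, str]:
    """Return clone URLs for each protocol Gitblit exposes (assumed layout)."""
    repo = quote(repo)  # keeps "/" so "team/app.git" still works
    return {
        "https": f"https://{host}:{NODE_PORTS['https']}/r/{repo}",
        "http": f"http://{host}:{NODE_PORTS['http']}/r/{repo}",
        "git": f"git://{host}:{NODE_PORTS['git']}/{repo}",
        "ssh": f"ssh://{user}@{host}:{NODE_PORTS['ssh']}/{repo}",
    }


if __name__ == "__main__":
    for proto, url in clone_urls("localhost", "myproject.git").items():
        print(f"{proto:5} git clone {url}")
```

Because the certificate is self-signed, git clone over HTTPS will refuse the connection unless you trust the certificate or, for a quick local test only, pass -c http.sslVerify=false to git.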

Set Up Jenkins in Local Kubernetes

To set up Jenkins in Kubernetes, apply the following config to the cicd-pipeline namespace, either with kubectl or through the Kubernetes dashboard:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-repo-pv
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: /tmp/jenkinsData
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-repo-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: jenkins
  labels:
    app: jenkins
spec:
  containers:
    - name: jenkins
      image: jenkins/jenkins:lts
      ports:
        - containerPort: 8080
      volumeMounts:
        - name: jenkins-data
          mountPath: /var/jenkins_home
  volumes:
    - name: jenkins-data
      persistentVolumeClaim:
        claimName: jenkins-repo-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: jenkins
  labels:
    app: jenkins
spec:
  selector:
    app: jenkins
  type: NodePort
  ports:
    - port: 8080
      targetPort: 8080
      nodePort: 30088

As you can see, this time, instead of applying separate config files, I put the volume, pod and service definitions into a single config file (jenkins.yaml), separated by --- lines. To apply it from the command line, execute this command:

kubectl create -f jenkins.yaml -n cicd-pipeline
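Jenkins can take a minute or two to start after the pod is created, and until then the web UI may refuse connections or answer with an error status. A minimal Python polling sketch, assuming Jenkins is reachable at http://localhost:30088 (the NodePort from jenkins.yaml on Docker Desktop). wait_for_http is a hypothetical helper that treats any status below 500 as "up", so both a login page and a 403 count as ready; the fetch function is injectable so the logic can be exercised without a running Jenkins.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str) -> int:
    """Return the HTTP status code, including error statuses like 403 or 503."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code


def wait_for_http(
    url: str,
    timeout: float = 300,
    interval: float = 5,
    fetch: Callable[[str], int] = http_status,
) -> bool:
    """Poll url until it answers with a status below 500 or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            if fetch(url) < 500:
                return True
        except OSError:
            pass  # connection refused/reset while the pod is still starting
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


if __name__ == "__main__":
    ready = wait_for_http("http://localhost:30088/login")
    print("Jenkins is up" if ready else "Jenkins did not come up in time")
```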

After creating the service, you can reach the Jenkins web UI at http://your-node-ip:30088. On first access, Jenkins asks for an initial administrator password.

Use kubectl to read the password from the initialAdminPassword file inside the Jenkins pod (named jenkins in jenkins.yaml):

kubectl exec -it jenkins -n cicd-pipeline -- cat /var/jenkins_home/secrets/initialAdminPassword

Running the above command prints a password string. Copy it, paste it into Jenkins and click the button to start the initial Jenkins setup. In the next step, choose “Install suggested plugins”, which installs a commonly used set of plugins for CI/CD work.

After the plugin installation completes, you will be asked to choose a username and password for the admin user. Fill in the form and finish the setup.

The Jenkins setup is now finished. You can log in with the username and password you chose in the previous step and start working.
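With Jenkins running, the pipeline now exposes NodePorts from three Services. NodePorts must be unique across the cluster and, by default, fall within 30000-32767 (the API server's --service-node-port-range). The Python sketch below checks both rules for the ports used in this article; the port list is hard-coded from the manifests above rather than read from the cluster, and check_node_ports is a hypothetical helper that can be reused before adding more services.

```python
from collections import Counter

# NodePorts declared in the Service manifests of this article
NODE_PORTS = {
    "registry": [30001],
    "gitblit": [30443, 30080, 30418, 30419],
    "jenkins": [30088],
}
DEFAULT_RANGE = range(30000, 32768)  # default --service-node-port-range


def check_node_ports(services: dict[str, list[int]]) -> list[str]:
    """Return a list of problems; an empty list means the ports look fine."""
    problems = []
    counts = Counter(p for ports in services.values() for p in ports)
    for port, n in sorted(counts.items()):
        if n > 1:
            problems.append(f"port {port} is used {n} times")
    for name, ports in services.items():
        for port in ports:
            if port not in DEFAULT_RANGE:
                problems.append(f"{name}: {port} is outside 30000-32767")
    return problems


if __name__ == "__main__":
    print(check_node_ports(NODE_PORTS) or "all NodePorts are unique and in range")
```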

Now that we have installed the four main pillars of the pipeline (Kubernetes, the Docker registry, Gitblit and Jenkins), we can shape and configure the pipeline itself. I will explain the further steps in the next part of this article. Stay tuned!